Gen-AI and the Amazing Maze of Empathy

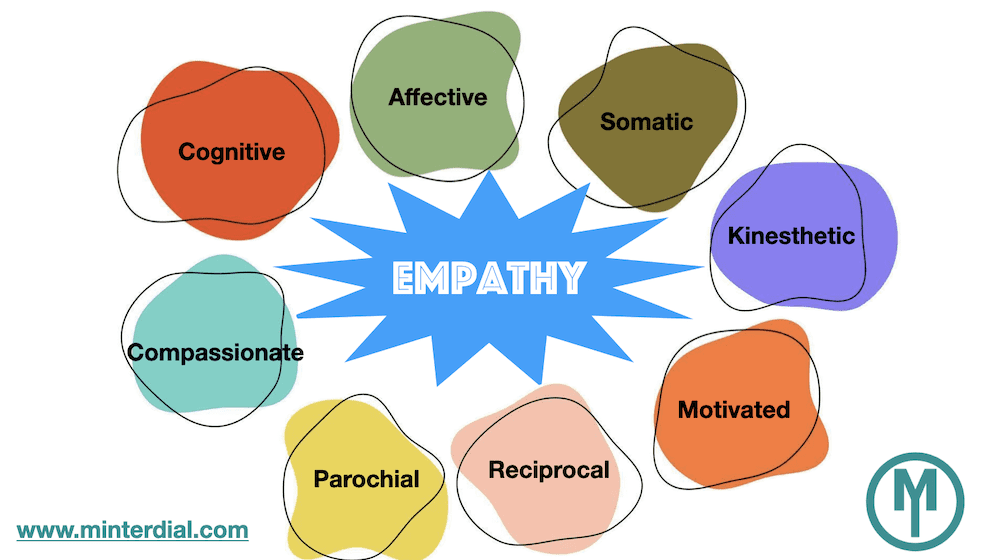

Ask a room full of executives what empathy is and you’ll usually get a range of answers: someone will talk about walking in the other person’s shoes, and there will be some confusion with sympathy and compassion. Ask a room full of experts in sociology and psychology, and the definitions become far more technical and specific, yet may be equally diverse. You’ll hear about empathic concepts such as:

- cognitive

- affective (or emotional)

- somatic

- kinesthetic

- compassionate

- motivated

- parochial

- reciprocal

- …

This list reminds me of the challenge of classifying certain illnesses under a single basket name that later gets broken down into many different pathologies or types. Take diabetes. Originally, it was treated as a single pathology. Then it was split into two types (Type 1 and Type 2) that are very different from each other. And now certain schools of thought propose splitting diabetes into five discrete types.

Beyond the maze of definitions, there are several unanswered questions about empathy, such as how to measure it. Another puzzle is whether empathy can exist if it is only cognitive and/or is not followed by any action. Moreover, can empathy exist at all if the person who is the object of the empathy doesn’t perceive it?

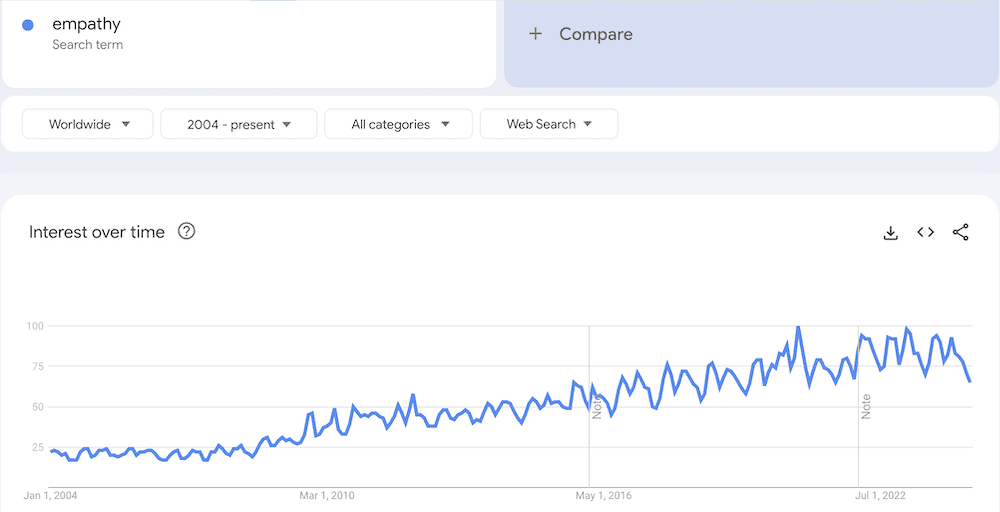

Google Trends shows that, over the last two decades, empathy as a term and topic has attracted a lot of attention globally (with a peak during the pandemic).

Both the definition and these questions matter for our society because empathy is vital to our connectedness and cohesion. In a world where individualism, loneliness, narcissism and mental health are constantly being mentioned (in the media, with friends and at family dinner tables), empathy is the glue that allows us to understand, and eventually bond with, others who have different perspectives and experiences. These questions also matter when it comes to inserting or encoding empathy into organizations, or even into a machine, whether it’s a robot, a chatbot or a therapeutic AI. What is empathy really? What are its constituent parts? How can you break it down into 0s and 1s? How can you evaluate it, or even measure it?

I had a conversation with two experts in the field of AI and empathy, Peter van der Putten PhD and Lidewij Niezink PhD (each described below), and together we came up with a first draft of a first-principles definition of empathy. With a little help from Perplexity.ai to unscramble our 90-minute conversation, here is what we found. We would like as many learned and interested people as possible to add their comments and opinions. The invitation is open!

First Principles Definition of Empathy

Empathy is a multifaceted capacity that involves several interrelated skills and processes, enabling an individual to understand, experience, and respond to the perspectives, emotions, expectations and experiences of others. Rather than thinking of empathy as walking in the shoes of another, where we can end up making it about ourselves (yikes, my feet hurt!), we must balance our focus between self and other, and avoid projecting our own perceptions onto the other person. The following six principles can be distilled to form a first definition of empathic skills:

Core Principles of Empathy

- Experiential Space Holding:

- Empathy involves creating and maintaining an experiential space where one can tune into the emotions, thoughts and experiences of another without prematurely projecting one’s own judgments or conclusions. This space allows for a genuine understanding of the other’s state.

- Listening and Reformulation:

- A fundamental aspect of empathy is the ability to listen attentively and reformulate what has been heard to ensure accurate understanding. This involves not jumping to conclusions or giving immediate advice but rather reflecting back the emotions and thoughts of the other person.

- Perspective-Taking:

- Empathy requires the ability to take the perspective of another person. Perspective taking is the ability to understand and consider another person’s viewpoint, thoughts, feelings, and moral expectations. This capacity helps in accurately interpreting and responding to the needs of others.

- Transparency and Intention:

- Being transparent about one’s intentions and self-aware about the limitations of one’s empathic abilities is useful, if not crucial. This principle is especially important in the context of AI, where the system’s capabilities and purposes must be clearly programmed, trained and communicated to users.

- Means to an End:

- Empathy should be viewed as a tool to achieve specific goals rather than an end in itself. The purpose behind empathizing—whether to provide support, build relationships, or achieve a particular outcome—shapes how empathy is expressed and utilized.

- Ethical Considerations:

- The application of empathy must be guided by ethical considerations, ensuring that it is used to genuinely benefit others rather than to manipulate or harm them. This includes balancing business objectives with the well-being of individuals. When the empathy in question is that of an entity (e.g. an organization), there ought to be a level of consistency and congruence with the entity’s core values, while also taking into account the needs of others.

In the above, we’ve distilled empathy into a set of first principles that, except for the sixth (Ethical Considerations), can each be broken down into specific, discrete behaviors. The ethical considerations are inevitably relative: it is up to each person to establish their own ethical position and intentions. In this approach, we are deliberately setting aside the different types of empathy in order to arrive at a more humanistic, less academic expression. If we want Gen-AI to display empathic capacities, we believe this is the way to go:

- Decide when, where and why to be empathic

- Define empathic skills and operationalize them in discrete behaviors

- Program and train these behaviors in AI, whether generative or other forms of AI

- Evaluate and iterate on the process
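To make the "operationalize" and "evaluate" steps a little more concrete, here is a minimal Python sketch of what a behavior rubric could look like. Everything in it is our own illustrative assumption: the `Behavior` class, the `RUBRIC` entries, the trigger phrases and the `score` function are hypothetical, and simple phrase matching is only a toy stand-in for a real evaluation (human raters or a trained classifier would be far more appropriate).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Behavior:
    """One discrete, checkable behavior tied to a principle (hypothetical)."""
    principle: str
    description: str
    check: Callable[[str], bool]


def contains_any(phrases):
    """Build a check that passes if the reply contains any of the phrases."""
    def check(reply: str) -> bool:
        text = reply.lower()
        return any(phrase in text for phrase in phrases)
    return check


def contains_none(phrases):
    """Build a check that passes if the reply contains none of the phrases."""
    def check(reply: str) -> bool:
        text = reply.lower()
        return not any(phrase in text for phrase in phrases)
    return check


# Illustrative rubric only: the phrases are placeholders, not validated markers.
RUBRIC = [
    Behavior("Experiential Space Holding",
             "Does not jump to immediate advice",
             contains_none(["you should", "you need to", "just try"])),
    Behavior("Listening and Reformulation",
             "Reflects back what was heard",
             contains_any(["it sounds like", "if i understand"])),
    Behavior("Perspective-Taking",
             "Checks its understanding with a question",
             lambda reply: "?" in reply),
    Behavior("Transparency and Intention",
             "Is open about being an AI or about its limits",
             contains_any(["i'm an ai", "as an ai", "i may be wrong"])),
]


def evaluate(reply: str) -> dict:
    """Return a pass/fail result per principle for a single reply."""
    return {b.principle: b.check(reply) for b in RUBRIC}


def score(reply: str) -> float:
    """Fraction of rubric behaviors the reply displays (0.0 to 1.0)."""
    results = evaluate(reply)
    return sum(results.values()) / len(results)


if __name__ == "__main__":
    reply = ("It sounds like the deadline is weighing on you. "
             "Is that right? I'm an AI, so I may be wrong.")
    for principle, passed in evaluate(reply).items():
        print(f"{principle}: {'yes' if passed else 'no'}")
    print("score:", score(reply))
```

The point of the sketch is the shape rather than the checks themselves: each principle maps to one or more observable behaviors, each behavior has a test, and the resulting scores can feed the next round of iteration. The sixth principle, ethics, is deliberately left out because it is not reducible to a discrete behavior in the same way.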

We welcome your thoughts!

About Lidewij Niezink, Ph.D.

Lidewij Niezink is an independent scholar and practitioner focused on the development of empathy in all its aspects. She is a co-founder, with Katherine Train, of Empathic Intervision, an author, and an Applied Psychology teacher with a Ph.D. from the University of Groningen in the Netherlands. She develops evidence-based interventions and education for diverse organizations and writes and speaks on empathy for scientific, professional, and lay audiences. Lidewij is also a co-founder of the Empathy Circle practice. https://www.linkedin.com/in/lniezink/

About Peter van der Putten, Ph.D.

Peter van der Putten is Director of Pegasystems’ AI Lab and an assistant professor in AI at Leiden University, where he studies what he calls Artificial X, with X being anything that makes us human. Peter has an MSc. in Cognitive Artificial Intelligence from Utrecht University and a Ph.D. in data mining from Leiden University, and combines academic research with applying these technologies in business. https://www.universiteitleiden.nl/en/staffmembers/peter-van-der-putten#tab-1