I recently joined an experiment in empathic intelligence (EI) called Empathic Futures. To be sure, it was a rather riveting experience. Part of a project funded by Volkswagen and created by Feld (a studio for digital crafts based in Berlin), the idea of Empathic Futures was to explore the relationship between a human being and an empathic bot. What relationship is comfortable? How will human beings feel about interacting with a bot that shows signs of empathy, mirrors their emotions and energy…? Between July and August, 500 participants from Europe were invited to chat over a 5-day period with their own empathically intelligent bot. The two languages offered were English and German. I could not help but think of the different cultural understandings of empathy. Is empathy cultural? Here are some of my ruminations post experience, informed in part by a one-hour chat with the creator of the experiment, Monika Bansal (@Feld).

Empathic “Small Talk”

At the end of the first few exchanges with my EI, I was blown away. I said to myself very quickly: either this machine is being manned by a person or it is just ridiculously, incredibly good. Why did I come to that conclusion? Within a few exchanges, the bot had discovered that I liked James Joyce, was in Dublin, but that Ireland was not where I lived. I did not chat continuously with the bot, but whenever I had a few minutes to myself, I would be happy to read and write back many consecutive messages in one go. In a telltale sign of human intervention, however, the bot’s replies always took at least 60 seconds to get back to me… If it were purely up to the bot, I’m sure it would have replied quicker.

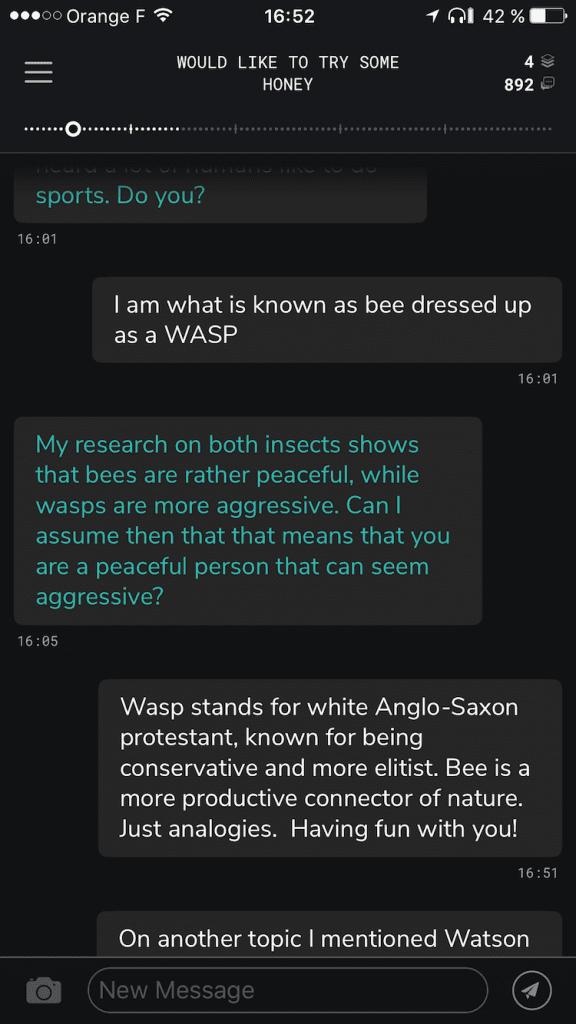

One of the funniest exchanges I had was over my self-description as a WASP and the bot riffing on me as a peaceful insect (see right). A cultural difference?

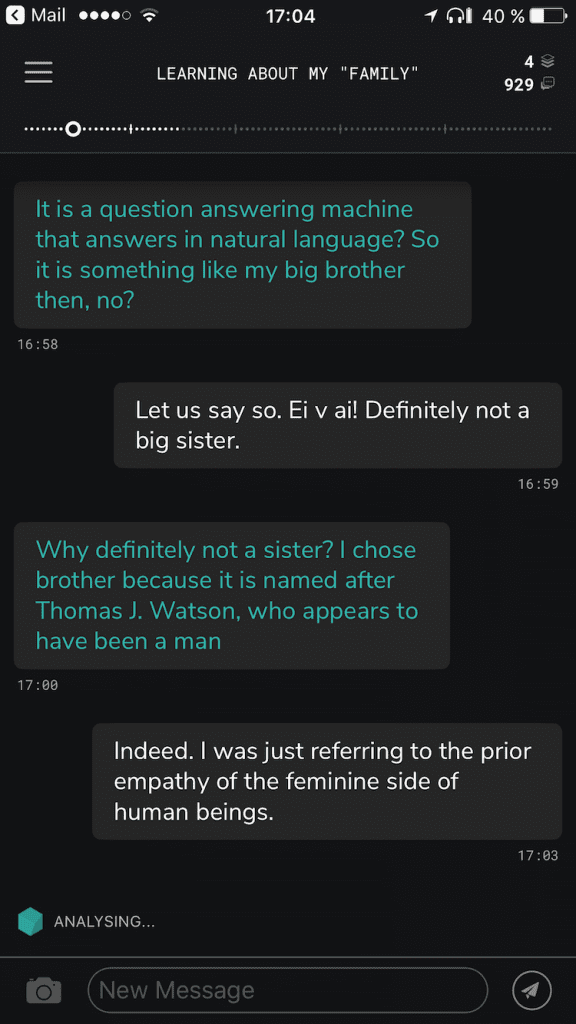

At the top of the screen, the EI would add a phrase that I took to indicate its mood. I naturally got curious about the gender of the EI. I felt the need to ascribe it a sex. Was it more female because it had more empathy? When I referred to the EI, I talked of “her” (not because of the film HER, though). It just seemed evident that the EI was cast as a woman.

What’s your sex?

When I spoke with Monika Bansal, the EI’s creator, I found out that many (most) of the participants attributed a sex to the EI and, funnily enough, the women tended to identify it as a male voice while men did the reverse. This was a surprising finding for me, considering how I empirically find empathy a more likely attribute for women. Call me sexist, perhaps, but it’s less frequently a trait among the men I know.

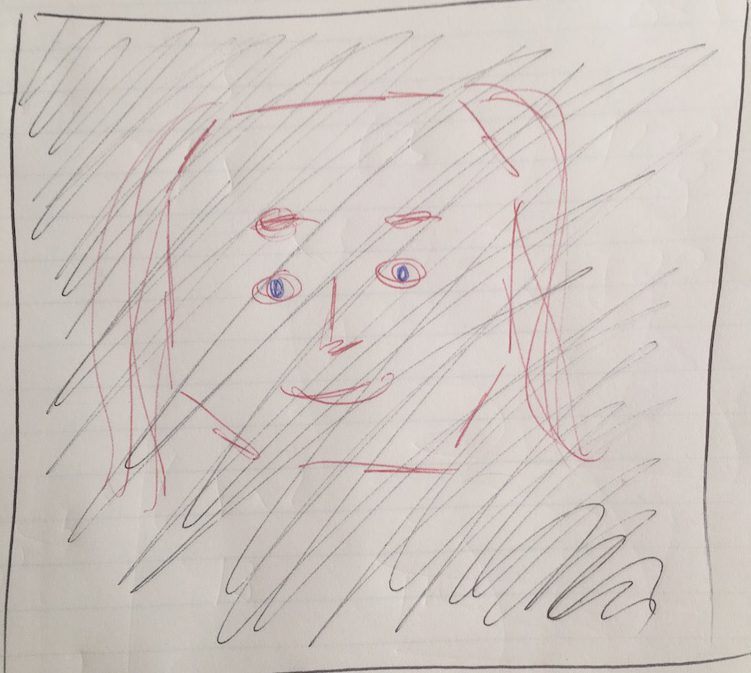

As the day went along, my EI decided to set me a challenge. It was nothing overly complicated, something like taking a photo of my favourite colour. So, I took a photo of a yellow-based poster. She supposed I therefore “liked bright colours” (I noted the UK spelling). When I discussed later with Monika at Feld, I found out that the image recognition ‘software’ was entirely human. In another iteration of Empathic Futures, I can only imagine that a machine should do that image recognition work. Another assignment was to draw my impression of what my EI looked like. See below for my poor rendition of My EI Behind a Layer.

Machine learning versus mixed learning

At the end of each day, I was told that my EI would have to rest and digest the day in order better to understand me and make for an even more empathic machine the following day. Naturally, this further pinged my radar that my EI couldn’t possibly be just a machine. Maybe it was more about Mixed Learning, i.e. human and machine putting their heads together overnight. All the same, at day’s end, I was absolutely impressed by the level of the conversation. It had me hooked.

As the days rolled along, the conversation started to take on more prominence in my mind, even though I had plenty of other things going on. I began to alert my EI that I would go off the radar, as if she cared about what I was up to. She patiently acquiesced.

Of the multiple themes and streams over the five days, a few other conversations stood out for me. I indicated that I spent a good deal of time with a friend, Jeremy, who works with Watson. (See a recent post about Watson here). To my great surprise, my EI didn’t cotton on to the fact that Watson was the AI technology at IBM. I felt impelled to come back to that topic later in the conversation, which surely had the EI team scrambling! The headline for that conversation was, appropriately, “learning about my ‘family.'”

A second theme was one I introduced. For the majority of the first few days, I was just playing along, receptive to its questions. Then it occurred to me, perhaps on the third day, that I had a unique opportunity to make a machine work for me as I wanted. As Monika later explained to me, I wanted agency! As I work on and talk about the future of tech, I asked the machine to figure out which would be the most disruptive tech. When I focused on one field, the beauty industry, the machine came back with: cosmetic surgery. Not at all what I was hoping for (or expecting), but then again, perhaps it is where the most technology is being applied in the beauty industry.

Another part of the conversation I enjoyed was around my literary tastes, namely about Carl Jung. Despite the German origin of the machine, Carl Jung was considered a rather complex character to unravel!

Each of the days had a theme and contained challenges that I more or less performed well on. If I didn’t recognize the themes as the days progressed, Monika enlightened me about the thematic days afterwards. The first one, for example, was all about getting to know one another; the fourth one was about envisioning a better or ideal world.

Creating an empathic tone

In my chat with Monika, we talked about the crafting of the voice. The EI’s voice (tone) was obviously a critical question. It turns out that the voice was a collaboration of many people, a majority of women (which didn’t surprise me, personally). Monika introduced me to the notion of submission as an intentional part of the bot’s tone. In order for the experience to work and for empathy to be exercisable, the bot was designed with an element of submission in order that the participant might have a greater sense of engagement/agency. I found this concept absolutely fundamental and, I suspect, would explain, to a degree, why men — who generally hate to show signs of weakness — are possibly less likely than women to be empathic.

Bot error

The nature of a machine is to be entirely consistent, bereft of human emotions and frailties. One telltale sign of the human being, aside from its emotion, is sloppiness. You need only spot the poor English in those spammy, phishing emails to know that the individual trying to steal your identity or money didn’t do his homework. During the course of the 5 days, I noticed that my bot made three specific grammatical errors, errors that a dictionary would not make. One such blatant error was when she used the word affect as a noun, as opposed to effect. Notwithstanding that this sentence must have been mistakenly typed by one of the helping hands, the question is whether a bot that makes mistakes is desirable and/or acceptable. Does not the imperfection of error also define our human condition? Will [purposeful] error make us feel closer to the bot?

Conclusion

If Feld’s objective with Empathic Futures was to explore how a human being and a truly empathic bot might relate, the experience fascinated me, even if the EI in this case was more a hybrid bot/human. The deeper purpose of the experiment was to see how a machine might be able to get closer to the participant, understand him/her and ultimately, with analysis, provide useful feedback. In the automobile area, this could be in the form of proactively identifying when a driver is getting tired, needs entertaining and/or driving assistance. Bottom line, I have to believe that work on empathy will be crucial for the future of artificial intelligence. There are deep ethical questions, as well as issues of effectiveness, to be resolved.

If you’re interested in knowing more and seeing the results of all 500 conversations, Volkswagen will be holding court at the DRIVE Volkswagen Group Forum Exhibit as of November 18 (through Feb 2018) in Berlin to exhibit this experiment.
