In today’s busy world, where customers have plenty of choice, a heightened level of impatience and limited resources, it is ever more difficult for a brand to achieve consistent customer satisfaction and lasting loyalty, much less customer advocacy. Among the available tools, it is only natural that brand marketers want to send customer satisfaction surveys to understand where they stand and how they have performed. However, it’s clear that some brands (and their agencies) are completely out of touch with their customers. As much as I remain a devout fan of Air France (through thick and thin, and the occasional travel travesty), I was absolutely gobsmacked by the survey I recently received from them after calling to book a last-minute flight from Paris to the US.

Customer satisfaction – the right intention, but…

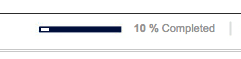

Given that the entire reservation took about 15 minutes, receiving this request to complete their survey seemed completely out of proportion… The email accompanying the survey did not mention how long it would take to fill in. And that, it turns out, was with good reason. Had they stated that the survey would take over 10 minutes to complete, it would have been a non-starter. After filling in the first three questions (c. 3 minutes), I saw the “completion bar” at the top sitting at just 10%.

Don’t make the process unsatisfying

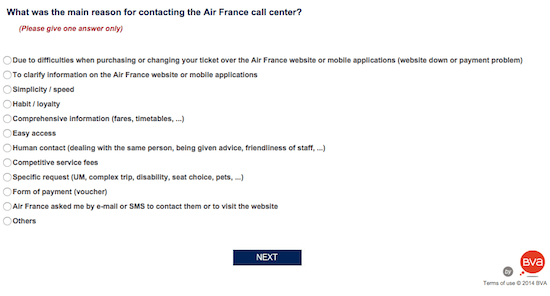

The next question was an eyesore and completely deflated any desire I had to continue. Not only was the amount of text OTT (over the top), but I also had to read every possible answer in order to pick the single most suitable one. At that rate, I was looking at spending closer to 30 minutes of my time on this survey. Unlikely! On top of that, there was zero reward for me, not even a little bone.

In truth, the survey went much faster after that, which means the progress bar was inaccurate throughout: the last question showed only 89% completed! In the end, I managed to rush through it in under 10 minutes. I also noticed a few typos in the English-language version.

The role of the agency…

Not only will an overly long survey cause substantial drop-off, but those who do finish it will start giving lackadaisical answers. BVA (the agency) clearly did not give Air France appropriate advice on building an effective questionnaire. In my opinion, that is a professional error. In its current form, the chances of a representative pool of people answering this survey completely, thoroughly and accurately are slim. The AF marketing team surely believed they were covering every base (that way, the boss could not fault them for leaving anything out). Meanwhile, BVA surely charged for the preparation time. Even in the question shown above, it reads oddly that respondents are asked for a single answer while “others” (plural) is offered as an option. Moreover, the “others” option cruelly doesn’t let you elaborate.

The brand’s directive

While the little emoticon surveys now popping up everywhere are a bit light in terms of customer satisfaction measurement, they have the benefit of being extremely quick and easy. Over time, and spread across many locations, they can no doubt be an effective tool. For an online or telephone interaction, as in the AF case here, I would favor a quick email right after the call that asks no more than three quick questions, one of which is the “moneyball” question (i.e. the KPI that will really make the difference and against which the organization will measure its success). That moneyball question must be in EVERY survey. The others could change over time. In the case above, a survey that takes longer than the service itself is simply wrong.

As it reviews the questions it would like to ask, a brand marketing team needs to make sure it is focused on the most important criteria and pare the questionnaire down to the essentials. Before sending it out, the team must be clear on what it is going to do with the responses and how it will measure them against the improvements made or requested. Are the questions aligned with the internal processes and the goals and objectives of the different departments? A one-off survey that will not be repeated (as this one surely will not be) is not going to help move the “peanut” forward, as my old boss used to say.

And one last thing: don’t make the text so small. The small print may be an attempt to “shrink” the survey, but the end result is an eyesore.

Your thoughts?